Implications of the EU AI Act on Risk Modelling

Date

May 28, 2025

In this article, we outline the impact of the EU AI Act on banks, and specifically on risk management. We walk you through the AI Act timeline and key definitions, and end with practical examples from credit risk and recommended next steps.

EU AI Act timeline & key dates

The AI Act is part of the EU's digital regulation framework. It aims to address the complexities and potential risks associated with AI systems, and it establishes a risk-based compliance framework.

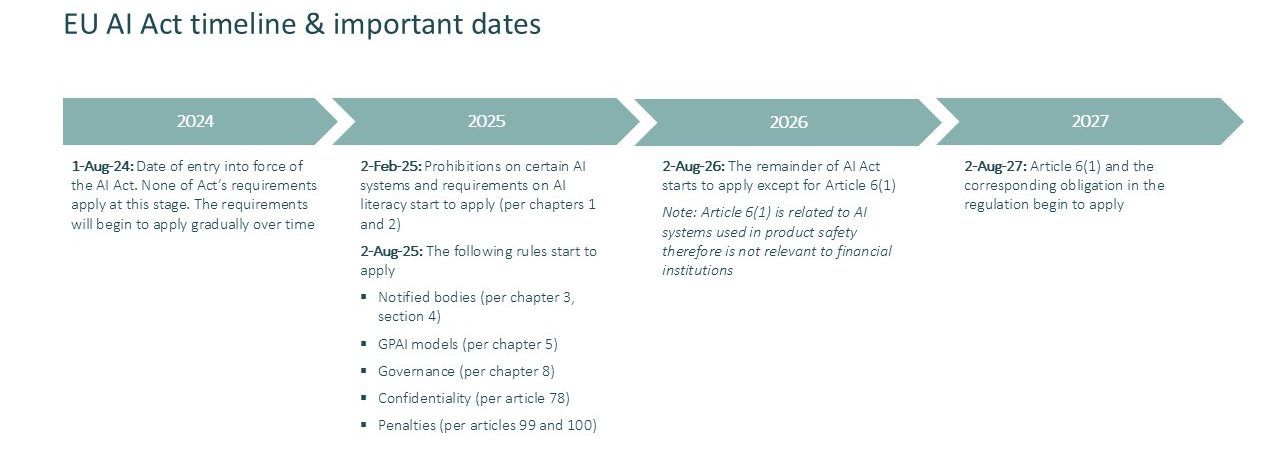

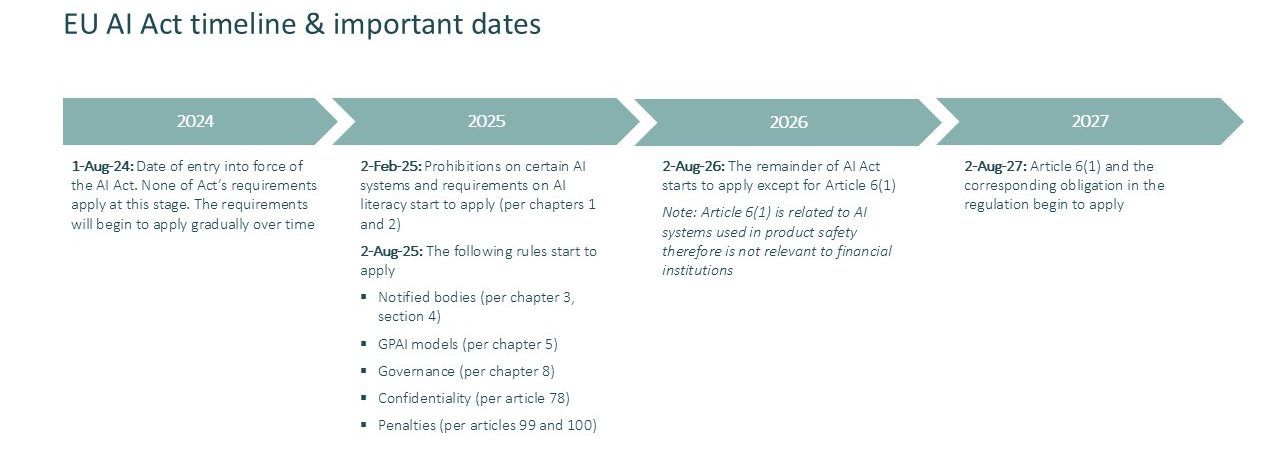

Over the next three years, the European Commission (EC) will roll out a series of guidelines that further define how the AI Act is to be implemented (relevant well beyond financial institutions). Figure-1 shows when the requirements come into effect. We narrowed the timeline to the key dates on which requirements and obligations for the different risk categories kick in.

How financial institutions should deal with these regulations and obligations depends on their role as a provider and/or a deployer, and on how many of their analytics frameworks can be classified as AI systems. It must be emphasised that the interpretation of the Act's language is still being refined: the Commission is developing guidelines to clarify vague points and will tighten the scope for the practical implementation of the regulation.
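Figure-1 is an image, so as a textual companion the sketch below encodes the main application dates set out in Article 113 of the AI Act (Regulation (EU) 2024/1689) and answers which milestones have been reached on a given date. The milestone labels are our own one-line summaries and the helper names are hypothetical; check the Regulation itself before relying on a date.

```python
from datetime import date
from typing import Optional

# Main application dates of the AI Act (Article 113), in chronological order.
# Labels are simplified one-line summaries, not the legal wording.
MILESTONES = [
    (date(2024, 8, 1), "Entry into force"),
    (date(2025, 2, 2), "Prohibited AI practices and AI literacy obligations apply"),
    (date(2025, 8, 2), "General-purpose AI model obligations, governance and penalties apply"),
    (date(2026, 8, 2), "Most remaining provisions apply, including Annex III high-risk systems"),
    (date(2027, 8, 2), "High-risk classification under Article 6(1) (Annex I products) applies"),
]


def milestones_reached(on: date) -> list[str]:
    """Return the labels of all milestones that apply on the given date."""
    return [label for start, label in MILESTONES if start <= on]


def next_milestone(on: date) -> Optional[tuple[date, str]]:
    """Return the first (date, label) milestone strictly after the given date, or None."""
    return next(((start, label) for start, label in MILESTONES if start > on), None)
```

For example, on this article's publication date (28 May 2025), `milestones_reached` returns the first two milestones and `next_milestone` returns the 2 August 2025 entry.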

EU AI Act definitions & key concepts with respect to risk models

Risk Categories

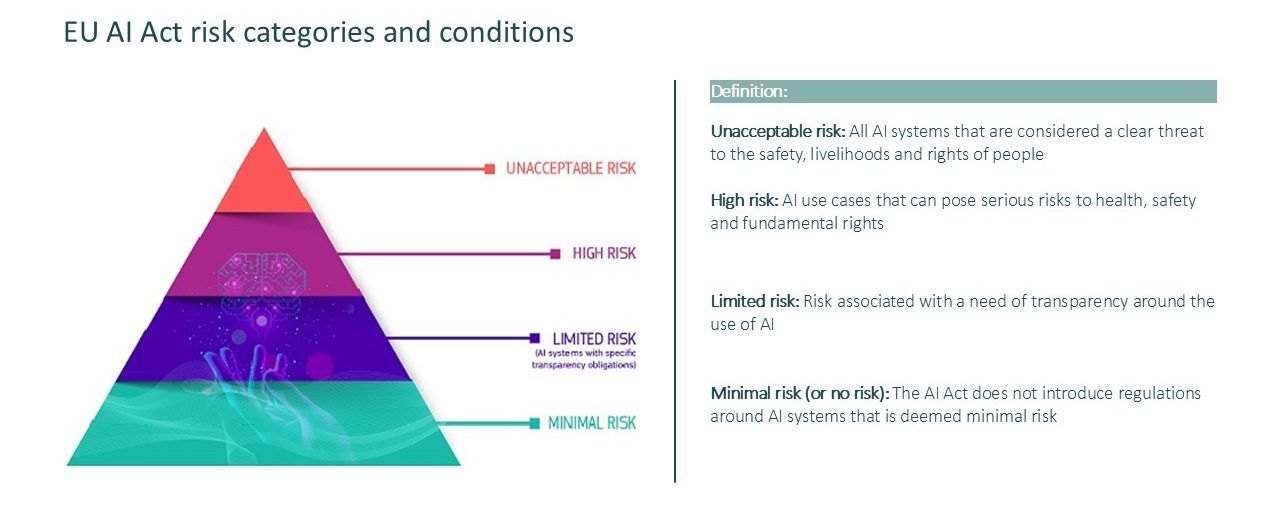

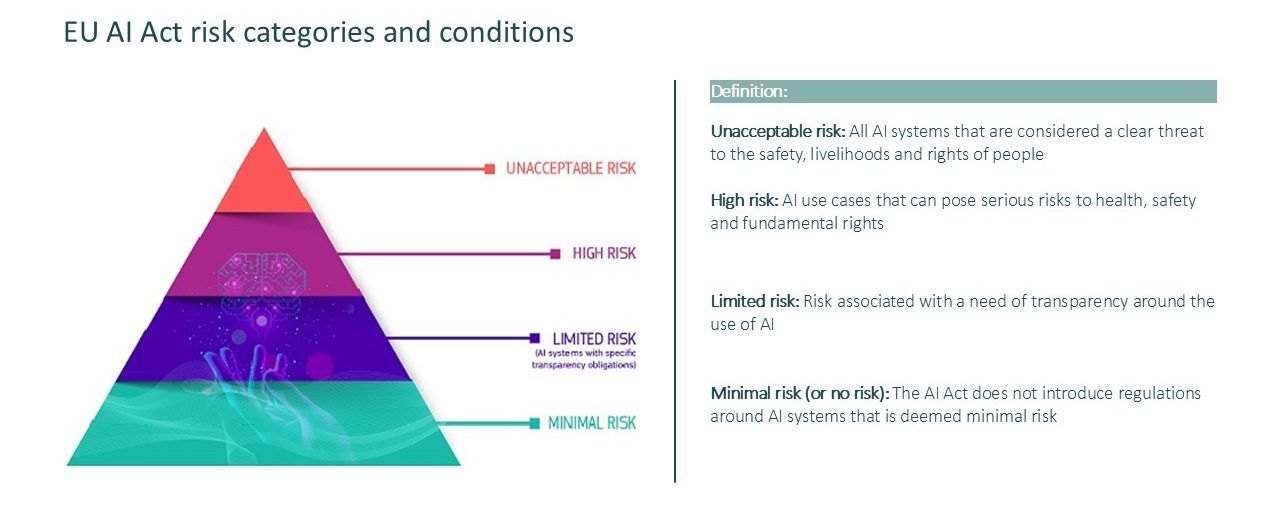

There are four risk categories: unacceptable, high, limited, and minimal risk. Each category comes with its own defining criteria and obligations. Figure-2 illustrates the risk categories and their definitions as published by the EC.

Both the unacceptable-risk and high-risk categories aim to regulate the use of AI in sensitive areas that are not easily quantified, such as personal safety and freedom, fundamental rights, and discrimination. Financial institutions should therefore be mindful of the following:

- Future changes to these category definitions may widen their scope and bring risk models into the unacceptable or high-risk categories.

- Existing models are grandfathered if they were in use before August 2026, but any substantial change to such a model can bring it within the scope of the AI Act requirements.
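The grandfathering rule in the second point can be expressed as a small check. This is a simplified sketch: it follows the reading above and Article 111(2), under which high-risk systems placed on the market or put into service before 2 August 2026 are only caught when their design changes significantly afterwards. It ignores the separate 2 August 2030 deadline for systems used by public authorities, and the function name is hypothetical.

```python
from datetime import date

# High-risk systems in service before this date are grandfathered (Article 111(2)).
GRANDFATHERING_CUTOFF = date(2026, 8, 2)


def must_comply(in_service_since: date, significantly_changed_since_cutoff: bool) -> bool:
    """Simplified check whether an existing high-risk model must meet AI Act requirements.

    Ignores the 2 August 2030 deadline for systems used by public authorities.
    """
    if in_service_since >= GRANDFATHERING_CUTOFF:
        return True  # systems put into service on or after the cut-off must comply
    # Grandfathered, unless the design has been significantly changed since the cut-off.
    return significantly_changed_since_cutoff
```

Whether a recalibration or re-estimation counts as a significant change is exactly the judgement the text warns about; the boolean only records the outcome of that assessment.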

The grouping of AI/machine learning (ML) implementations into the EC's risk categories is driven by the following factors (not exhaustive):

- intended use

- sensitivity of data features used in training

- target variable definition (if relevant)

- method complexity

- business line model owner

For example, credit acceptance models for private individuals are considered “high-risk” because they affect people's access to the financial system. Credit acceptance models for business entities are considered “minimal risk”, since the affected party is a commercial entity.
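An AI registry can capture these factors per model so that the categorisation is traceable. The sketch below is hypothetical: the field names are our own, and the only rule it encodes is the credit acceptance example above. Every other use case returns `None` to signal that expert review is needed.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class RiskCategory(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


@dataclass
class ModelProfile:
    """Factors driving the risk categorisation (not exhaustive)."""
    name: str
    intended_use: str               # e.g. "credit acceptance"
    affects_natural_persons: bool   # private individuals vs business entities
    sensitive_features: bool        # sensitivity of data features used in training
    target_variable: Optional[str]  # target variable definition, if relevant
    method: str                     # method complexity, e.g. "logistic regression"
    business_line: str              # business line model owner


def triage(profile: ModelProfile) -> Optional[RiskCategory]:
    """First-pass categorisation; None means the case needs expert review."""
    if profile.intended_use == "credit acceptance":
        # Private individuals: their access to the financial system is affected.
        if profile.affects_natural_persons:
            return RiskCategory.HIGH
        return RiskCategory.MINIMAL
    return None
```

Returning `None` rather than defaulting to a category keeps the sketch from silently under- or over-classifying use cases it encodes no rule for.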

AI Systems

The AI Act defines an AI system broadly, so many advanced analytics (AA) frameworks fall under it. Article 3(1) (Chapter I) defines an AI system as follows:

‘AI system’ means a machine-based system that is designed to operate with varying levels of autonomy and that may exhibit adaptiveness after deployment, and that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments.

This wording covers most of the AI techniques that financial institutions use across their lines of business, for example in customer support for private and corporate banking, anti-money laundering (AML), HR, risk management, and IT. Although private parties continue to push for amendments to the existing language or for a narrower definition of an AI system, we have not encountered an argument convincing enough for the EC to pursue such exceptions.

We therefore recommend that financial institutions track and manage their AI ecosystem with appropriate risk and compliance support and governance structures to comply with the AI Act.
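Because the definition is broad, a registry can screen candidate systems against its elements one by one. The checklist below is a hypothetical first screen, not a legal test: it encodes the elements of Article 3(1) as booleans and treats adaptiveness as optional, because the definition says a system "may" exhibit it. The EC guidelines add nuance, such as exclusions for certain simple systems, that a boolean checklist does not capture.

```python
from dataclasses import dataclass


@dataclass
class SystemDescription:
    machine_based: bool
    operates_with_some_autonomy: bool
    has_explicit_or_implicit_objectives: bool
    infers_outputs_from_input: bool         # predictions, content, recommendations, decisions
    outputs_can_influence_environment: bool  # physical or virtual environments
    adapts_after_deployment: bool = False    # "may exhibit": not a required element


def meets_ai_system_definition(s: SystemDescription) -> bool:
    """Rough screen against the elements of Article 3(1).

    Adaptiveness after deployment is deliberately not checked.
    """
    return all([
        s.machine_based,
        s.operates_with_some_autonomy,
        s.has_explicit_or_implicit_objectives,
        s.infers_outputs_from_input,
        s.outputs_can_influence_environment,
    ])
```

A statically estimated credit scorecard that never retrains still passes this screen, which is consistent with the broad reading above.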

Provider/Deployer Roles

The EU AI Act defines provider and deployer as follows [2]:

- Provider: A natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model or that has an AI system or a general-purpose AI model developed and places it on the market or puts the AI system into service under its own name or trademark, whether for payment or free of charge.

- Deployer: A natural or legal person, public authority, agency or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity.
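Read literally, the two definitions can be combined into a first-pass role check. The sketch below is a simplified stand-in for the decision framework in Figure-3, which is not reproduced in the text; the field names are hypothetical. It ignores, for example, Article 25, under which a deployer that puts its own name on a high-risk system or substantially modifies it is considered its provider.

```python
from dataclasses import dataclass


@dataclass
class Involvement:
    develops_or_has_developed: bool              # builds the system or commissions it
    markets_or_puts_into_service_own_name: bool  # under its own name or trademark
    uses_under_own_authority: bool
    personal_non_professional_use: bool = False


def roles(inv: Involvement) -> set[str]:
    """Return the roles ("provider", "deployer") an organisation holds for one system."""
    held = set()
    if inv.develops_or_has_developed and inv.markets_or_puts_into_service_own_name:
        held.add("provider")
    if inv.uses_under_own_authority and not inv.personal_non_professional_use:
        held.add("deployer")
    return held
```

A PD model built in-house and used in the bank's own credit process yields both roles; a vendor scoring tool used as-is yields only `deployer`.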

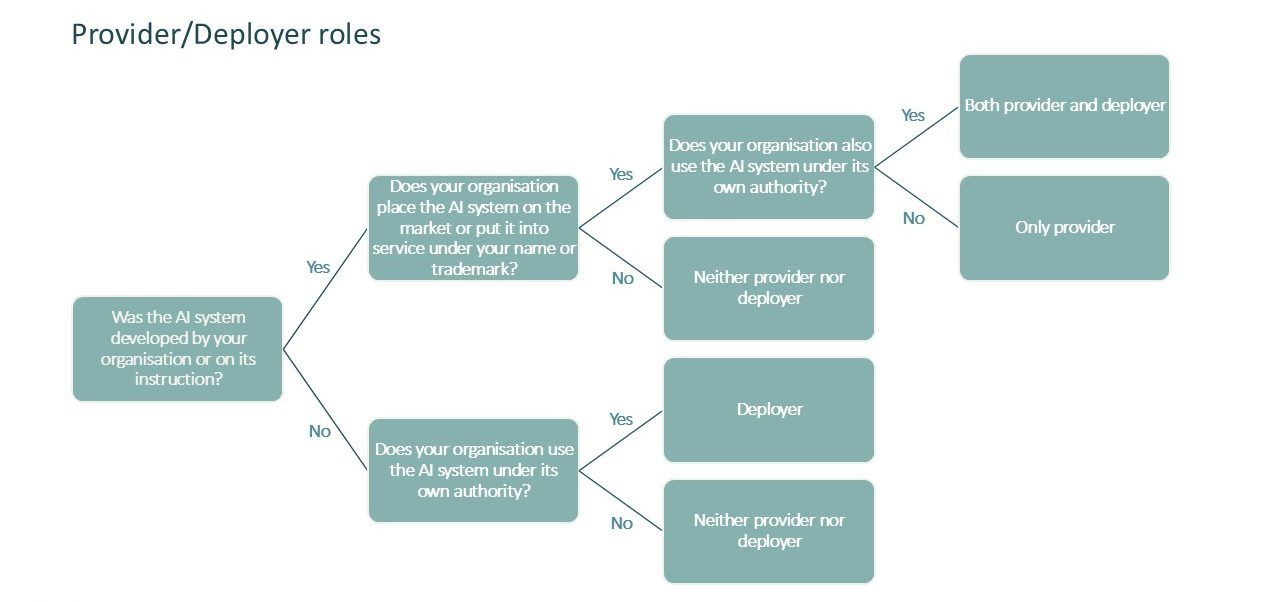

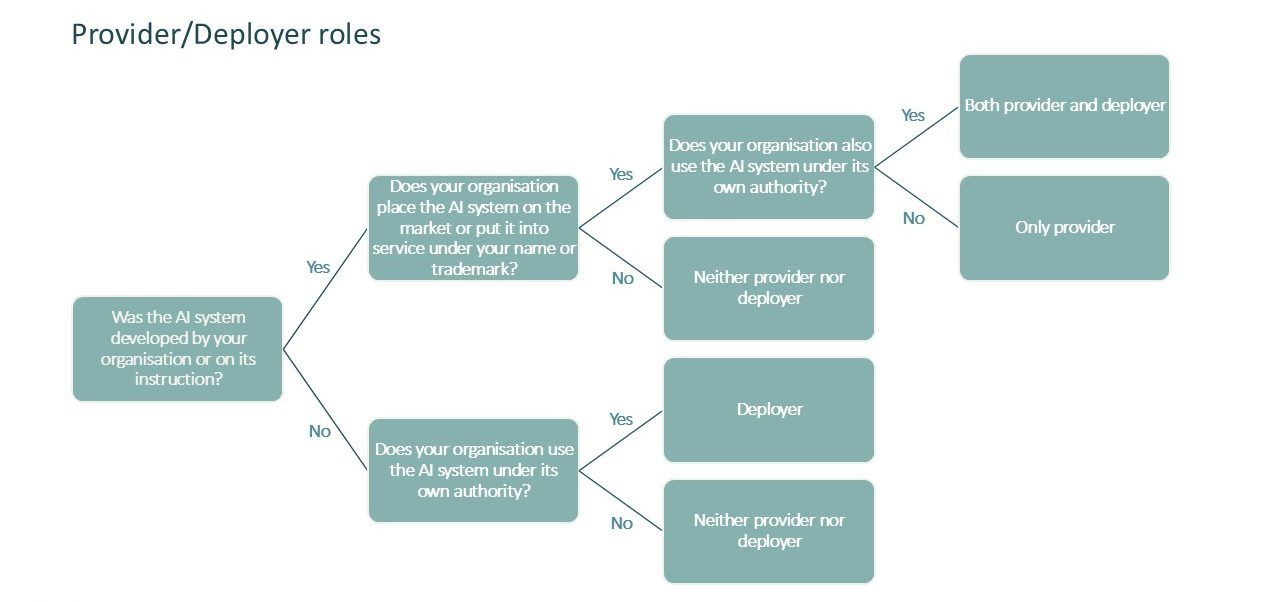

Our risk and compliance experts propose the decision framework shown in Figure-3 to help financial organisations determine whether they qualify as a provider and/or deployer of each AI system registered within their ecosystem.

Financial institutions should set up a registry of AI systems in which they record, for each AI system, its risk category and their provider/deployer status. If you are both provider and deployer of a system, you need to meet the obligations of both roles.
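The point that holding both roles means meeting both sets of obligations maps naturally onto a registry entry whose obligations are the union over its roles. In the sketch below the obligation lists are heavily abbreviated, illustrative examples for high-risk systems only (drawn from the provider and deployer obligations in Articles 16 and 26); the class and field names are hypothetical.

```python
from dataclasses import dataclass, field

# Abbreviated, illustrative obligations for HIGH-RISK systems only (not complete).
HIGH_RISK_OBLIGATIONS = {
    "provider": {"quality management system", "technical documentation", "conformity assessment"},
    "deployer": {"use in line with instructions", "human oversight", "monitoring of operation"},
}


@dataclass
class RegistryEntry:
    system_name: str
    risk_category: str                             # "unacceptable", "high", "limited" or "minimal"
    roles: set[str] = field(default_factory=set)  # subset of {"provider", "deployer"}

    def obligations(self) -> set[str]:
        """Union of the obligations of every role held; only high-risk is modelled."""
        if self.risk_category != "high":
            return set()  # other categories are out of scope for this sketch
        result: set[str] = set()
        for role in self.roles:
            result |= HIGH_RISK_OBLIGATIONS[role]
        return result
```

Keeping obligations as sets makes the "both roles, both obligations" rule a plain union rather than a special case.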

Expected consequences for risk modelling: practical examples

The AI Act makes clear that AI systems used to calculate the regulatory capital requirements of financial institutions, such as banks and insurers, should not be considered high-risk (recital 58). However, AI systems used to evaluate the creditworthiness or establish the credit score of natural persons, and AI systems used for risk assessment and pricing in relation to natural persons in life and health insurance, are high-risk AI systems under the AI Act (Annex III, points 5(b) and 5(c)).

The EC guidelines on the definition of an AI system mention logistic regression, but only in the context of systems for improving mathematical optimisation. They do not explicitly exclude the logistic regression models often used in credit risk modelling from the definition of an AI system. It is therefore prudent to treat logistic credit risk models as AI systems.
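To see why a logistic credit model sits comfortably within Article 3(1), consider what it does: from an applicant's inputs it infers an output (a predicted probability of default, PD) using parameters estimated from data, and that prediction drives a credit decision. A minimal sketch; the feature names, coefficients and cut-off are hypothetical, not a real scorecard.

```python
import math


def probability_of_default(features: dict[str, float],
                           intercept: float,
                           coefficients: dict[str, float]) -> float:
    """Logistic regression PD: 1 / (1 + exp(-(b0 + sum_i b_i * x_i)))."""
    score = intercept + sum(b * features[name] for name, b in coefficients.items())
    return 1.0 / (1.0 + math.exp(-score))


def accept(prob: float, cut_off: float) -> bool:
    """Turn the predicted PD into a decision: accept when PD is below the cut-off."""
    return prob < cut_off


# Hypothetical coefficients, as if estimated from historical loan data.
COEFFICIENTS = {"debt_to_income": 2.5, "has_arrears": 1.2}
applicant = {"debt_to_income": 0.4, "has_arrears": 0.0}
prob = probability_of_default(applicant, intercept=-3.0, coefficients=COEFFICIENTS)
print(f"PD = {prob:.4f}, accepted = {accept(prob, cut_off=0.15)}")
```

With these numbers the linear score is -2.0, so the sketch prints `PD = 0.1192, accepted = True`: a prediction turned into a decision, which is the pattern the definition describes.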

In practice, the AI Act imposes no significant additional requirements on banks' capital models, as these are not high-risk under the Act. The high-risk use cases, credit scoring and creditworthiness assessment of natural persons, are often closely linked to the PD models used for capital, which already need to meet strict criteria. Moreover, the required conformity assessment for these use cases can be based on internal control, which banks already have in place. The EC still needs to publish guidelines on the practical implementation of the high-risk requirements: risk management system, data and data governance, technical documentation, record keeping, transparency and information for deployers, human oversight, and accuracy. Given the requirements that already apply to banks, we do not expect these guidelines to bring surprises.
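One way to test the expectation that these requirements hold no surprises is a simple gap analysis: map each high-risk requirement to an artefact that already exists in the bank's model risk framework and list what is left. The sketch assumes the requirements correspond to Articles 9 to 15 of the AI Act; the function name and the example artefacts are hypothetical.

```python
# High-risk requirements (Chapter III, Section 2), keyed by short name, with their Articles.
REQUIREMENTS = {
    "risk management system": "Article 9",
    "data and data governance": "Article 10",
    "technical documentation": "Article 11",
    "record keeping": "Article 12",
    "transparency and information for deployers": "Article 13",
    "human oversight": "Article 14",
    "accuracy, robustness and cybersecurity": "Article 15",
}


def gaps(existing_evidence: dict[str, str]) -> list[str]:
    """Requirements (with Article) for which no existing artefact has been mapped yet."""
    return [f"{req} ({article})" for req, article in REQUIREMENTS.items()
            if req not in existing_evidence]


# Hypothetical mapping to artefacts a bank may already maintain for its PD models.
evidence = {
    "risk management system": "model risk management policy",
    "data and data governance": "data quality framework",
    "technical documentation": "model development documentation",
    "record keeping": "model monitoring logs",
    "human oversight": "credit override and escalation process",
}
print(gaps(evidence))
```

With this example evidence, the sketch lists the Article 13 and Article 15 requirements as still open.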

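With respect to credit scoring and creditworthiness assessment, be aware that, according to the EC guidelines on prohibited AI practices and the case law of the European Court of Justice, AI systems that assess the probability of a natural person repaying a loan constitute ‘profiling’ and can therefore fall within the scope of social scoring. Such systems should be assessed against the prohibited practice in Article 5(1)(c), which applies where the resulting score leads to detrimental or unfavourable treatment in social contexts unrelated to those in which the data was collected, or to treatment that is unjustified or disproportionate.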
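The assessment can be structured as a short decision path. Article 5(1)(c) prohibits social scoring only where the score leads to detrimental or unfavourable treatment in social contexts unrelated to those in which the data was originally generated or collected, or to treatment that is unjustified or disproportionate to the behaviour or its gravity. The sketch below is a hypothetical triage aid that records those answers; it does not replace a legal assessment.

```python
from dataclasses import dataclass


@dataclass
class ScoringAssessment:
    is_profiling: bool                 # scores persons on behaviour or personal characteristics
    unrelated_context_detriment: bool  # detrimental treatment outside the original data context
    unjustified_or_disproportionate: bool


def screen_article_5_1_c(a: ScoringAssessment) -> str:
    """Triage outcome for the social scoring prohibition; not a legal conclusion."""
    if not a.is_profiling:
        return "out of scope"
    if a.unrelated_context_detriment or a.unjustified_or_disproportionate:
        return "potentially prohibited: escalate to legal review"
    return "in scope, no prohibited outcome identified: document the assessment"
```

A PD model used only for the loan decision it was built for, with proportionate outcomes, would plausibly end in the last branch; reusing the same score to restrict access to unrelated services would trigger the escalation branch.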

What should you do now?

To summarise, only some models affecting natural persons are categorised as high-risk under the EU AI Act. Nevertheless, we recommend that financial institutions take action in the short term. It is important to set up an AI strategy from a risk management perspective that shows how your organisation views AI risk. Here are three concrete first steps towards compliance with the Act:

- Craft an implementation roadmap towards a functional AI registry, including a buy, build, or re-use decision;

- Roll out AI literacy training and AI skill development in the first and second lines of defence;

- Create an inventory of potential high-risk AI systems and check what information needs to be registered in the EU database.

Of course, AI risk is broader than compliance with the AI Act. After these three short-term actions, financial institutions should make sure that their AI strategy from a risk perspective is crystal clear.

A clear, actionable AI strategy enables organisations to implement AI effectively and responsibly, creating real impact with AI.

| Index | Source | Link |
|---|---|---|
| [1] | European Commission (12 July 2024). European approach to artificial intelligence: AI Act | |
| [2] | Guidelines on the definition of an artificial intelligence system established by AI Act (6 February 2025) | https://digital-strategy.ec.europa.eu/en/library/commission-publishes-guidelines-ai-system-definition-facilitate-first-ai-acts-rules-application |

Talk to our experts

Let's create real impact together with data and AI

Financial Services Lead

Henriette Claus
