Our client, a major international insurance company, wanted to boost customer engagement and satisfaction using the latest AI technology. This led to the development of an AI-powered chatbot designed to interact with and assist users on their website. Beyond building the chatbot, a key challenge was managing the risks associated with deploying this new system. Keep reading to discover how we worked closely with our client to identify the system’s needs and successfully bring this solution to life.

Executive Summary

Challenge

Transforming customer support for a large insurance company seeking 24/7 service while mitigating costs.

Approach

Strategically initiating the use of LLM-powered chatbots, starting with domain-specific contexts, and ensuring compliance with regulations.

Implementation & Solution

Utilising a two-model setup with LLMs and Retrieval Augmented Generation for accurate responses, deploying on Azure Cloud, and maintaining flexibility with a mixed internal-external development team.

Impact for Client

Achieving 24/7 customer support across domains, engaging users with a user-friendly front-end, and initiating the company’s exploration into AI technology.

Learnings & Challenges

Discovering the importance of risk management from a project’s outset, refining accuracy through context adjustments, and identifying innovative ideas through collaborative brainstorming sessions.

Challenge

Our client, a large international insurance company, was looking to increase the engagement and satisfaction of their customers by providing 24/7 customer support. However, offering such support is costly, prompting them to explore other options. Consequently, they approached ADC to investigate whether LLM-powered chatbots could relieve the pressure on their support desks. They wanted a highly tailored solution that would comply with their corporate values while also engaging their customers.

Approach

Since LLM-powered chatbots are still early in their maturity cycle, we started by creating multiple context-aware chatbots, one for each support domain. For each of these domains, we first evaluated the regulations and policies related to AI to make sure that our solution would be fully compliant.

Subsequently, we determined the functional requirements of the chatbots. We then translated these into technical and implementation requirements relating to the architecture of the chatbot setup and the required infrastructure. Afterward, we developed a minimum viable chatbot (MVC) for each domain. These MVCs were then tested through a phased approach in which each phase was more public than the last.

Implementation & Solution

Functional Requirements

In collaboration with the client, we established the functional requirements of a customer-facing chatbot. At a high level, these include:

- Performance – Ensuring accurate and relevant responses in an appropriate tone, while filtering out any potentially harmful or biased content.

- Flexibility – Adapting solutions quickly and easily in the fast-evolving environment.

- Data Privacy & Ownership – Ensuring the client remains the sole owner of the data and preventing external parties from accessing it.

Technical & Implementation Requirements

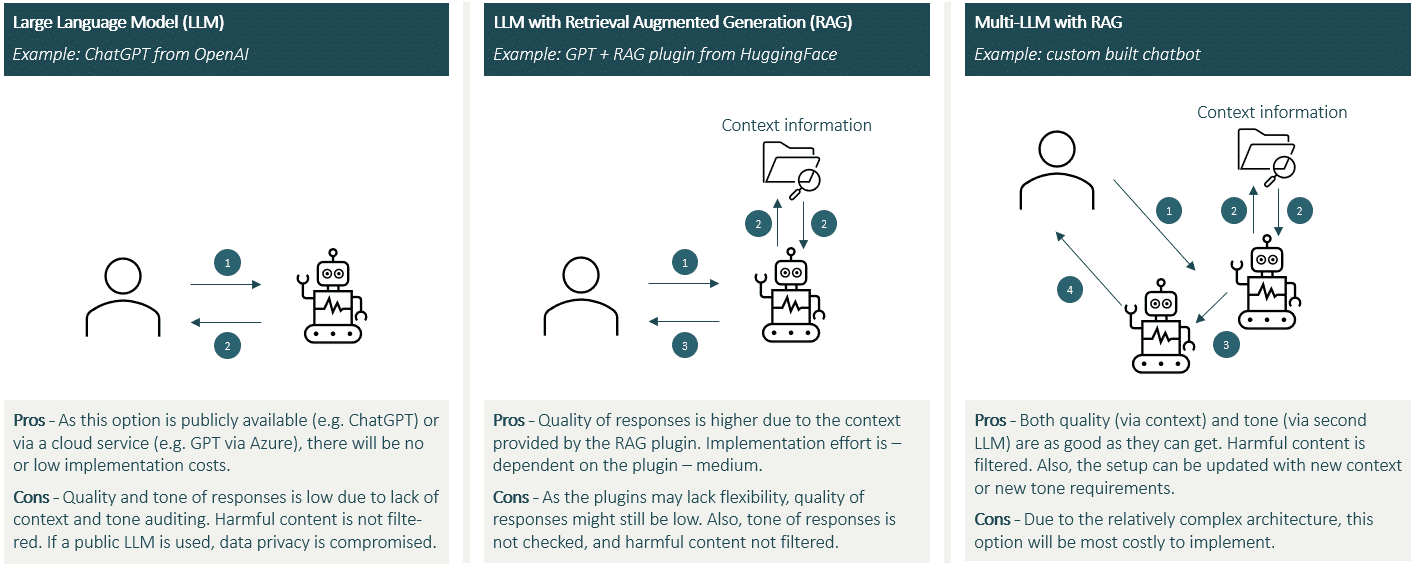

To ensure our chatbot met the performance requirements, we opted for a setup using two large language models (LLMs), with the first one enhanced by Retrieval Augmented Generation (RAG). Augmenting this ‘vanilla LLM’ with RAG gives the chatbot specific domain knowledge, allowing it to provide accurate and relevant answers based on context supplied by the system developer.

To address concerns about tone, harmful content, and biases, we implemented a second LLM that reviews and refines responses before they reach the end user. Because our client already used Azure and Office 365, we chose Azure cloud for this design. The focus on accuracy, relevancy, tone, and content filtering set our architecture apart from a straightforward call to the OpenAI service in Azure.
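The two-model flow described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the client’s implementation: the names `Document`, `retrieve`, and `answer` are hypothetical, the two models are passed in as plain functions rather than real Azure calls, and the retriever uses simple word overlap where a production system would more likely use embeddings and a vector index.

```python
from dataclasses import dataclass
from typing import Callable, List

# Assumption: an "LLM" here is any function from prompt text to completion text.
LLM = Callable[[str], str]


@dataclass
class Document:
    title: str
    text: str


def retrieve(question: str, documents: List[Document], top_k: int = 2) -> List[Document]:
    """Toy retriever: rank documents by the number of words shared with the question."""
    words = set(question.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(words & set(doc.text.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def answer(question: str, documents: List[Document], generator: LLM, reviewer: LLM) -> str:
    # Step 1: retrieve domain context and let the first model draft an answer.
    context = "\n".join(f"[{doc.title}] {doc.text}" for doc in retrieve(question, documents))
    draft = generator(f"Context:\n{context}\n\nQuestion: {question}")
    # Step 2: let the second model audit tone and content before anything is shown.
    return reviewer(
        "Review the draft below for tone, harmful content, and bias. "
        f"Return a corrected version.\n\nDraft: {draft}"
    )
```

Keeping the reviewer as a separate call means the draft never reaches the user directly, which mirrors the auditing step in the rightmost architecture of Figure 1.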

For flexibility, we decided to implement and maintain the solution with a mix of our internal team and external developers. This, coupled with the use of the RAG framework, allows easy modification of chatbot behaviour by updating prompts and context. Lastly, to ensure data privacy, we decided to host the solution on our client’s cloud servers and virtual network.

Minimum Viable Chatbot (MVC) Development & Testing Phase

As with any customer-facing product, a well-structured, incremental testing and roll-out plan was important, so we worked with a three-phase plan. Following each phase, a dedicated committee evaluated the results and decided whether the chatbots were ready for the next testing phase or required further refinement.

In the first phase, the chatbots were only made available to internal developers, who could access them via a private link with a username and password log-in. The developers were encouraged to stress-test the chatbots by trying to provoke inadequate responses, simulating a worst-case scenario involving a user with malicious intent.

The second phase extended access to friends and family, focusing on refining legal disclaimers on the front-end and qualitative aspects like tone of voice and user-friendliness. The final phase was a limited roll-out, where the chatbots were made available to a select number of customers.
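The gating logic of this three-phase plan can be expressed as a small state machine. The sketch below is illustrative only: the `Phase` names and the `next_phase` function are hypothetical, and the committee’s decision is reduced to a single boolean.

```python
from enum import Enum


class Phase(Enum):
    INTERNAL_DEVELOPERS = 1
    FRIENDS_AND_FAMILY = 2
    LIMITED_CUSTOMERS = 3


def next_phase(current: Phase, committee_approved: bool) -> Phase:
    """Advance one phase only when the review committee approves."""
    if not committee_approved:
        # Further refinement is needed, so the chatbot stays in the same phase.
        return current
    if current is Phase.LIMITED_CUSTOMERS:
        # Already in the final phase of the plan.
        return current
    return Phase(current.value + 1)
```

Modelling the phases explicitly makes it impossible to skip a phase, since the only way forward is through an approved review.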

Final Deliverable

For each domain, the client received a user-friendly front-end where the customer can interact with the chatbot, a robust back-end with the infrastructure to host the system and log interactions, and documentation covering the entire system.

Figure 1. Three chatbot architectures of increasing complexity. The leftmost architecture is the simplest but lacks context and does not audit tone or filter harmful content. The rightmost architecture provides context and audits each response.

Impact for Client

Through this project, our client achieved significant milestones, including 24/7 customer support across multiple support domains, setting them apart from competitors in customer service. Additionally, they now have a tool that sustains user engagement on targeted domains and provides information in a natural and simple way. Importantly, the project mitigated potential risks associated with the technology while complying with corporate policies.

These steps pave the way for further innovations. The chatbots could be rolled out to other parts of the business. Furthermore, the existing use-cases could be extended and improved. What if – for example – one could use AI to determine when a customer is better serviced by a human? Then the AI system could handle the main body of questions, but for specific questions, a human could be brought into the loop. The possibilities for future innovations are extensive, opening avenues for continued growth and success.

Learnings & Challenges

Every project brings many insights, and here, we will highlight three key learnings:

- Risk management and compliance should be considered by design. This means that relevant stakeholders should be included from the design phase onwards, and the developers should be aware of the relevant organisational policies and values throughout the process.

- Since the chatbot relies heavily on the context it receives, ensuring that this context is accurate and of high quality is important. Furthermore, the chatbot should refrain from providing information beyond its given context. Achieving this required significant adjustments to both the architecture and the context. Simple changes in instructions can have a big effect on outcomes, so it pays off to take time for experimentation.

- The project demonstrates that it’s possible to safely deploy high-quality customer-facing chatbots. This is relevant for a variety of organisations.
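The experimentation mentioned in the second learning can be made systematic with a small comparison harness. The sketch below is one possible approach, not the method used in the project: `refusal_rate` and `compare_instructions` are hypothetical names, the chatbot is assumed to be a function taking an instruction and a question, and a refusal is assumed to be an exact fallback message.

```python
from typing import Callable, Dict, List

# Assumption: the chatbot takes (instruction, question) and returns a reply string.
Chatbot = Callable[[str, str], str]


def refusal_rate(instruction: str, questions: List[str], chatbot: Chatbot, fallback: str) -> float:
    """Share of out-of-scope questions that the chatbot declines to answer."""
    if not questions:
        raise ValueError("at least one question is required")
    refusals = sum(1 for q in questions if chatbot(instruction, q).strip() == fallback)
    return refusals / len(questions)


def compare_instructions(
    instructions: Dict[str, str],
    questions: List[str],
    chatbot: Chatbot,
    fallback: str,
) -> Dict[str, float]:
    """Score every instruction variant against the same out-of-scope questions."""
    return {
        name: refusal_rate(text, questions, chatbot, fallback)
        for name, text in instructions.items()
    }
```

Running each instruction variant against a fixed set of questions the chatbot should not answer gives a comparable number per variant, which makes the effect of small wording changes visible.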

Continue the Conversation

Are you interested in discovering how ADC can assist your organisation? Our team has extensive experience in developing solutions that help streamline data management processes and create robust strategies. To learn more, please contact Edward Jansen (Strategy Lead).
