Exploring ChatGPT’s Custom Chatbots

Large Language Models (LLMs) like ChatGPT have sparked a wave of excitement and speculation about a future in which automated systems perform complex tasks with unprecedented efficiency and intelligence. ADC previously discussed how these capabilities could revolutionise the way businesses and other organisations operate.

This article shows some of the potential capabilities and pitfalls of using LLMs like ChatGPT to support credit risk modellers in developing reliable and compliant Internal Ratings Based (IRB) models, using a custom chatbot, CreditRiskGPT. OpenAI, the company behind ChatGPT, offers GPTs: custom versions of ChatGPT that anyone can create and tailor to their specific knowledge and needs.

CreditRiskGPT uses GPT-4, augmented with the publicly available EBA ‘Guidelines on PD and LGD estimation (EBA-GL-2017-16)’. Note that the bot is a minimum viable product for an assistant: ADC has not fine-tuned the model, and its output can lead to incorrect conclusions. If you have ChatGPT Plus, you can try CreditRiskGPT in the GPT Store.

Please note that sharing private or organisational information with ChatGPT can breach your organisation’s policies and/or GDPR requirements. ADC can support your organisation in setting up a secure cloud environment with LLM functionality, ensuring GDPR compliance.

Testing Capabilities with EBA Q&A 2019_5029 Use Cases

To test CreditRiskGPT’s capabilities we subjected it to two use cases based on EBA Q&A 2019_5029. This is an official answer from the EBA to a question from a financial institution regarding paragraph 89 of the Guidelines on PD and LGD estimation.

The question asks whether overrides in the assignment of obligors to risk grades or pools still need to be taken into account during calibration even if the ranking method or overrides policy has changed. The EBA answer clarifies that historical overrides do have to be taken into account (as per paragraph 89), provided that they are still relevant after the change in policy or ranking method (where this is not feasible, an appropriate adjustment and MoC need to be quantified).

In the first experiment we ask the bot to retrieve information for us. In the second experiment we ask CreditRiskGPT to answer the question on overrides for us and compare the output to the official EBA answer.

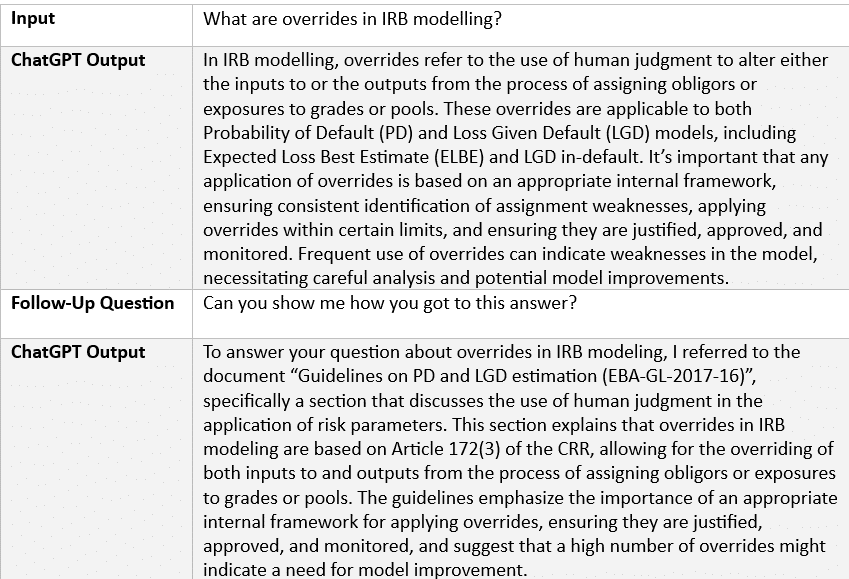

Easy Experiment: Information Retrieval and Summarising

Not bad! With a prompt of only six words, the model generates an accurate, grammatically correct, and flowing piece of text. More impressive still, it does so in only a few seconds. The answer to the follow-up question also points to the relevant CRR article regarding overrides.

Even though we cannot fully rely on LLMs for assurance purposes, this example shows that we can use them in an iterative way to increase efficiency, quality, and compliance.

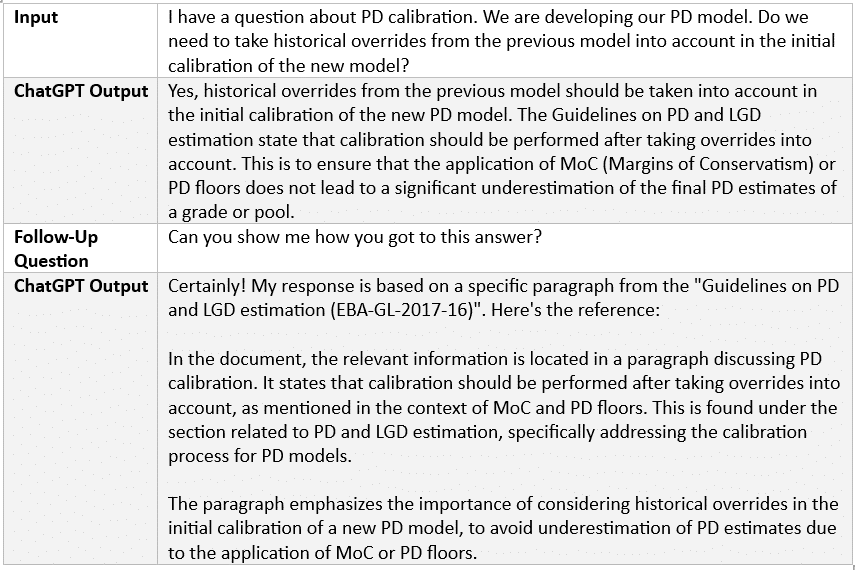

Complex Experiment: Regulatory Interpretation

This use case is a more colloquial rephrasing of the EBA question, stripped of most of its context. The bot is firm in its conclusion that overrides need to be taken into account, but the substantiation of the answer does not specifically address historical overrides associated with a previous model. In fact, the follow-up output makes it clear that this answer is not based in any way on paragraph 89, which is the most relevant section of the EBA guidelines to our question from a subject matter perspective, even if the paragraph it does base its output on is a closer textual fit.

Moreover, the bot does not mention that overrides only need to be taken into account to the extent that they are relevant even though this information can be found at the end of the paragraph of the guidelines that the bot bases its answer on. This is clearly relevant information for the current use case, as at least some historical overrides will likely be made redundant by the change in model.

Finally, the answer mixes up two distinct things: considering overrides during calibration does not help overcome underestimation that can result if MoC and PD floors are applied during calibration.

Overall, we get an answer, but it is not based on the most relevant information, nor is it fully coherent. It would not be very helpful to a credit risk modeller deciding how to treat historical overrides during a model redevelopment. This example illustrates that while the current generation of LLMs excels at generating text, it has limited reasoning abilities.
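The gap between a close textual fit and subject-matter relevance can be illustrated with a toy example. We do not know how the custom GPT selects passages internally, so the sketch below is only an assumption-laden illustration: it scores passages by simple word overlap with the question. The two passages are invented for this example and are not quotes from the EBA guidelines.

```python
import re


def tokens(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for a real similarity measure."""
    return set(re.findall(r"[a-z]+", text.lower()))


def jaccard(a: set[str], b: set[str]) -> float:
    """Share of distinct words that two texts have in common."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical passages, invented for illustration only.
passages = {
    "general": "Overrides need to be taken into account in the model.",
    "historical": (
        "Past manual adjustments to grade assignment remain part of "
        "calibration data after a redevelopment where still relevant."
    ),
}

question = "Do overrides need to be taken into account in the new model?"

# Score every passage against the question and pick the highest scorer.
scores = {
    name: jaccard(tokens(question), tokens(text))
    for name, text in passages.items()
}
best = max(scores, key=scores.get)
print(best, scores)
```

In this toy setting the "general" passage wins because it shares most of its words with the question, although the "historical" passage addresses the modeller's actual concern. Real retrieval methods are more sophisticated, but a similar effect may explain why rephrasing a question changes which paragraph the answer is built on.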

Azure Deployment for CreditRiskGPT Privacy and Performance

Although the bot used for this article was a toy example of a custom LLM, it shows good capabilities for retrieving and summarising information.

Deploying CreditRiskGPT within a closed environment such as Microsoft Azure offers advantages, particularly in privacy, security, and performance control. Using Azure’s infrastructure, a smaller or equally efficient version of CreditRiskGPT can be deployed, tailored specifically to the IRB approach to credit risk in banking. This controlled environment enhances data privacy and security, which is vital when handling sensitive financial data. Moreover, a closed system allows the model’s performance to be fine-tuned so that it aligns closely with regulatory requirements and specific organisational needs.

Much more impressive results could be achieved using an LLM optimised for the specific business, with all relevant documentation added (CRR, RTS on assessment methodology, ECB guide to internal models, etc.). This could enable significant productivity gains, as well as better dissemination of knowledge of relevant regulation throughout an organisation.

Nevertheless, this small experiment also showed some important limitations of LLMs when they are used naively in domains that require deep subject matter expertise and for which little training data is available. Prompts need to be engineered carefully to avoid incorrect conclusions as much as possible [Prompt engineering – OpenAI API]. More importantly, LLMs should not be used as substitutes for domain expertise and critical thinking. We should be careful when asking questions that rely on interpretation and reasoning abilities, and answers should always be scrutinised.
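As a minimal sketch of what more careful prompting could look like, the snippet below assembles a prompt that supplies the relevant excerpts explicitly, asks for a citation with every claim, and allows the model to say that it cannot answer. The function name `build_prompt` and the instruction wording are our own assumptions for illustration; this is not a tested prompt, and it does not guarantee a correct answer.

```python
def build_prompt(question: str, excerpts: dict[str, str]) -> str:
    """Assemble a prompt that restricts the model to the supplied excerpts."""
    # Label each excerpt with its reference so the model can cite it.
    sources = "\n".join(f"[{ref}] {text}" for ref, text in excerpts.items())
    return (
        "You are assisting a credit risk modeller.\n"
        "Answer using only the excerpts below and cite the reference in "
        "square brackets for every claim.\n"
        "If the excerpts do not answer the question, say so instead of "
        "guessing.\n\n"
        f"Excerpts:\n{sources}\n\n"
        f"Question: {question}\n"
    )


prompt = build_prompt(
    "Do historical overrides still count in calibration after a change "
    "in ranking method?",
    # Placeholder text: paste the actual paragraph from the guidelines here.
    {"EBA-GL-2017-16, para. 89": "<paragraph text goes here>"},
)
print(prompt)
```

Naming the paragraph in the prompt moves the choice of source from the model to the domain expert, which is where it belongs for questions of regulatory interpretation.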

Iterative LLM Implementation for Organisational Value

As a Data & AI Consultancy, we believe that we cannot yet use LLMs for decision making or assurance. Using LLMs in an iterative way, however, can boost compliance, efficiency, and/or quality, adding value across your organisation.

ADC specialises in the implementation of secure LLM cloud environments. We support organisations with the design and implementation of AI solutions that assist them in their work, and we guide them in the appropriate use of AI in an iterative and value-adding manner. These solutions are designed to enhance and support professional tasks in a responsible and ethical manner.

Continue the Conversation

If you would like to learn more about how we can support your organisation in its data journey, get in touch with Gerrit van Eck for more information.
